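Networking Issues When Running Virtual Machines on Kubernetes

How to make sure traffic to the Pod is forwarded to the VM

KubeVirt runs each virtual machine inside a Pod. Running the VM itself is easy, since it is just a process, but how do we make sure traffic is correctly delivered from the Pod to the VM?

How to give a VM multiple NICs

A VM will rarely have only one NIC, so how does KubeVirt attach multiple NICs to a VM?

In brief

Pod addresses in Kubernetes are effectively random and change every time a Pod is restarted. Kubernetes exposes Pods through a Service or a Headless Service, and the Pods are then reached through that Service or Headless Service.

KubeVirt then uses either a bridge or nftables rules to forward traffic, so that all traffic arriving at the Pod is delivered to the VM. This is what makes the VM reachable.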

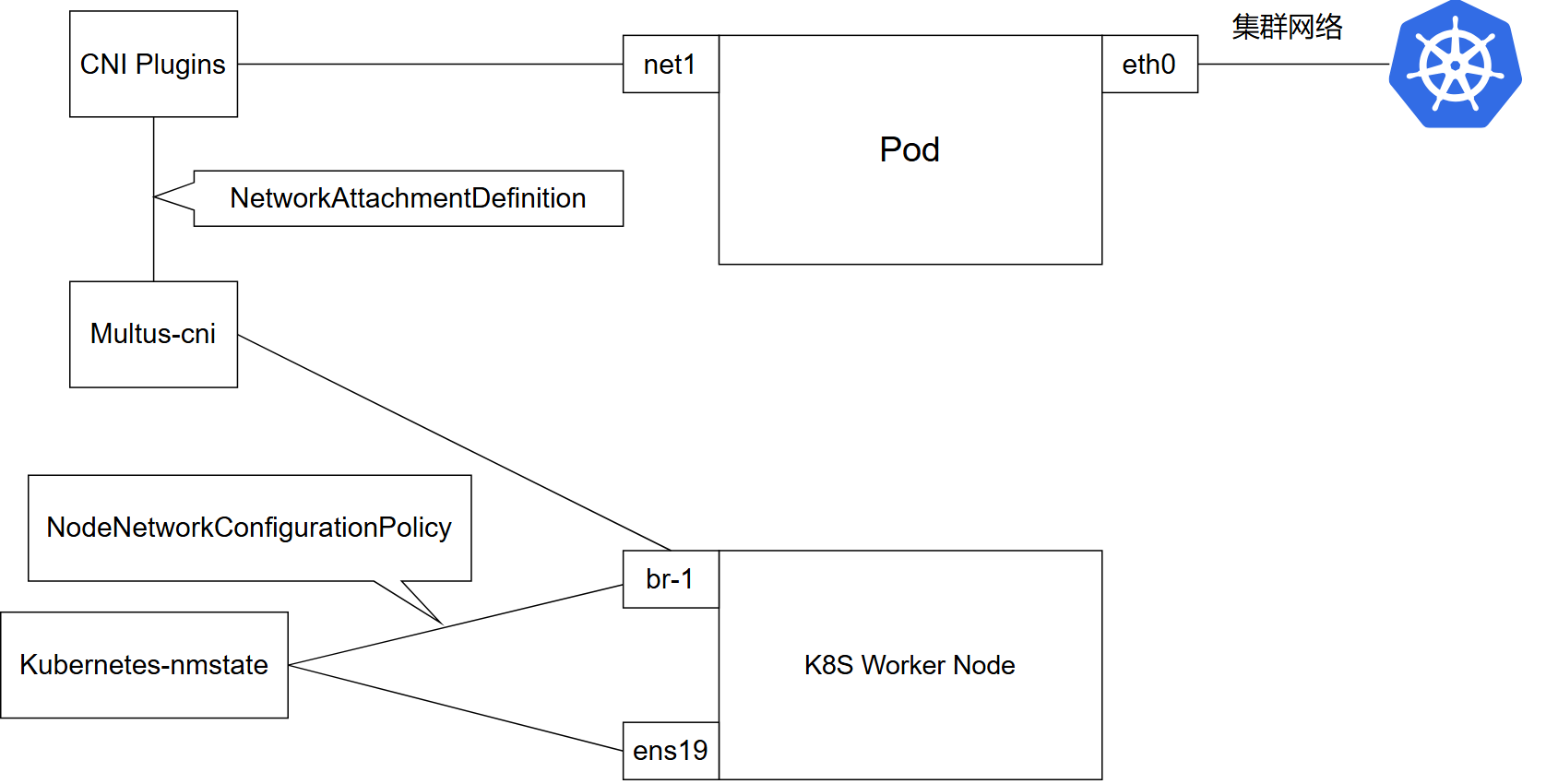

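As for adding NICs, I previously wrote about using NMstate and Multus to add NICs to a Pod, and KubeVirt can use these two components to add NICs to a VM. In addition, a Pod's address keeps changing, but a VM should not have a NIC address that changes all the time. Adding a dedicated NIC this way gives the VM a fixed address.

This approach is not limited to the open-source project: OpenShift Virtualization, the commercial product based on KubeVirt, adds VM NICs the same way.

NIC types supported by KubeVirt VMs

KubeVirt VMs support the following NIC types: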

- masquerade

- bridge

- sriov

The first two are the ones most commonly used.

| Type | Description |
|---|---|
| bridge | Connect using a Linux bridge |
| sriov | Connect using a passthrough SR-IOV VF via vfio |
| masquerade | Connect using nftables rules that NAT both egress and ingress traffic |

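In general, when a VM is created its NIC defaults to bridge mode, and the VM's first NIC is associated with the Pod's NIC by default.

Masquerade mode

Address layout in Masquerade mode

Here is a VM, vm-alpine, whose NIC is in Masquerade mode.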

[root@base-k8s-master-1 ~]# kubectl get vm vm-alpine -o jsonpath='{.spec.template.spec.domain.devices}' | jq

{

"disks": [

{

"disk": {

"bus": "virtio"

},

"name": "containerdisk"

}

],

"interfaces": [

{

"masquerade": {},

"model": "virtio",

"name": "default"

}

]

}

View the NIC information of the Pod running the VM:

[root@base-k8s-master-1 ~]# kubectl exec -it virt-launcher-vm-alpine-gs5mx -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: eth0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default qlen 1000

link/ether 7a:50:cd:02:bf:9d brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.100.223.30/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::7850:cdff:fe02:bf9d/64 scope link

valid_lft forever preferred_lft forever

4: k6t-eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default qlen 1000

link/ether 02:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.1/24 brd 10.0.2.255 scope global k6t-eth0

valid_lft forever preferred_lft forever

inet6 fe80::ff:fe00:0/64 scope link

valid_lft forever preferred_lft forever

5: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc fq_codel master k6t-eth0 state UP group default qlen 1000

link/ether 96:3d:3c:22:61:29 brd ff:ff:ff:ff:ff:ff

inet6 fe80::943d:3cff:fe22:6129/64 scope link

valid_lft forever preferred_lft forever

[root@base-k8s-master-1 ~]# kubectl exec -it virt-launcher-vm-alpine-gs5mx -- ip route

default via 169.254.1.1 dev eth0

10.0.2.0/24 dev k6t-eth0 proto kernel scope link src 10.0.2.1

169.254.1.1 dev eth0 scope link

View the NIC information inside the VM:

[root@base-k8s-master-1 ~]# virtctl console vm-alpine

Successfully connected to vm-alpine console. The escape sequence is ^]

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc pfifo_fast qlen 1000

link/ether de:f1:44:95:72:ec brd ff:ff:ff:ff:ff:ff

inet 10.0.2.2/24 brd 10.0.2.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::dcf1:44ff:fe95:72ec/64 scope link

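valid_lft forever preferred_lft forever

Inside the Pod there is an interface k6t-eth0 with address 10.0.2.1/24, along with the route 10.0.2.0/24 dev k6t-eth0 proto kernel scope link src 10.0.2.1. The VM's address is 10.0.2.2/24.

The VM's NIC address is therefore different from the Pod's. When the VM is accessed, nftables rules in between forward the traffic to the VM.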
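To make the address relationship concrete, here is a small Python sketch. It is only an illustration using the addresses copied from the output above; the helper name on_bridge_subnet is made up for this example.

```python
import ipaddress

# Addresses copied from the command output above.
bridge_if = ipaddress.ip_interface("10.0.2.1/24")  # k6t-eth0 inside the Pod
vm_ip = ipaddress.ip_address("10.0.2.2")           # eth0 inside the VM
pod_ip = ipaddress.ip_address("10.100.223.30")     # eth0 of the Pod


def on_bridge_subnet(addr):
    """Return True if addr belongs to the subnet configured on k6t-eth0."""
    return addr in bridge_if.network


print(on_bridge_subnet(vm_ip))   # the VM sits on the k6t-eth0 subnet
print(on_bridge_subnet(pod_ip))  # the Pod IP is outside that subnet
```

Since the Pod IP and the VM IP are on different subnets, something has to translate between them, which is what the nftables rules in the next section do.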

Viewing the nft rules

# Find the node the VM is running on

[root@base-k8s-master-1 ~]# kubectl get vmi

NAME AGE PHASE IP NODENAME READY

vm-alpine 43h Running 10.100.223.30 base-k8s-worker-1.example.com True

# Find the VM process

[root@base-k8s-worker-1 ~]# ps -ef | grep qemu | grep vm-alpine

...output omitted...

qemu 116859 116552 0 Mar07 ? 00:03:29 /usr/libexec/qemu-kvm -name guest=default_vm-alpine,debug-threads=on ......

# Enter the network namespace of that process

[root@base-k8s-worker-1 ~]# nsenter -t 116859 -n

# List the nft rules

[root@base-k8s-worker-1 ~]# nft list ruleset

table ip nat {

chain prerouting {

type nat hook prerouting priority dstnat; policy accept;

iifname "eth0" counter packets 3 bytes 180 jump KUBEVIRT_PREINBOUND

}

chain input {

type nat hook input priority 100; policy accept;

}

chain output {

type nat hook output priority -100; policy accept;

ip daddr 127.0.0.1 counter packets 0 bytes 0 dnat to 10.0.2.2

}

chain postrouting {

type nat hook postrouting priority srcnat; policy accept;

ip saddr 10.0.2.2 counter packets 0 bytes 0 masquerade

oifname "k6t-eth0" counter packets 6 bytes 340 jump KUBEVIRT_POSTINBOUND

}

chain KUBEVIRT_PREINBOUND {

counter packets 3 bytes 180 dnat to 10.0.2.2

}

chain KUBEVIRT_POSTINBOUND {

ip saddr 127.0.0.1 counter packets 0 bytes 0 snat to 10.0.2.1

}

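}

With these rules, traffic arriving on the Pod's eth0 is DNATed to 10.0.2.2, which is the VM. Traffic sent to 127.0.0.1 inside the Pod is likewise DNATed to 10.0.2.2, and traffic leaving with source address 10.0.2.2 is masqueraded.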
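The effect of the ruleset can be sketched as a toy model in Python. This is a simplification for illustration, not how nftables is implemented: the Packet class and function names are invented here, the client address 10.100.1.5 is hypothetical, and masquerade is modeled by passing in the Pod IP as the address of the outgoing interface.

```python
from dataclasses import dataclass, replace

VM_IP = "10.0.2.2"      # VM address seen in the ruleset
BRIDGE_IP = "10.0.2.1"  # k6t-eth0 address inside the Pod


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    iif: str = ""  # incoming interface ("" for locally generated traffic)
    oif: str = ""  # outgoing interface


def prerouting(pkt):
    # iifname "eth0" jump KUBEVIRT_PREINBOUND -> dnat to 10.0.2.2
    if pkt.iif == "eth0":
        return replace(pkt, dst=VM_IP)
    return pkt


def output(pkt):
    # ip daddr 127.0.0.1 dnat to 10.0.2.2
    if pkt.dst == "127.0.0.1":
        return replace(pkt, dst=VM_IP)
    return pkt


def postrouting(pkt, pod_ip):
    # ip saddr 10.0.2.2 masquerade (source becomes the outgoing interface address)
    if pkt.src == VM_IP:
        return replace(pkt, src=pod_ip)
    # oifname "k6t-eth0" jump KUBEVIRT_POSTINBOUND -> snat 127.0.0.1 to 10.0.2.1
    if pkt.oif == "k6t-eth0" and pkt.src == "127.0.0.1":
        return replace(pkt, src=BRIDGE_IP)
    return pkt


# Inbound: a hypothetical client connects to the Pod IP.
inbound = prerouting(Packet(src="10.100.1.5", dst="10.100.223.30", iif="eth0"))
print(inbound.dst)

# Outbound: the VM talks to the outside world through the Pod's eth0.
outbound = postrouting(Packet(src=VM_IP, dst="10.100.1.5", oif="eth0"),
                       pod_ip="10.100.223.30")
print(outbound.src)
```

From outside, the VM therefore looks as if it had the Pod's address, while inside the guest it always sees 10.0.2.2.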

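When should you choose Masquerade mode?

Use Masquerade mode for the VM NIC that is attached to the Pod's default network.

In OpenShift Virtualization, the VM's default NIC that connects to the Pod network is required to use Masquerade mode.

Bridge mode

Address layout in Bridge mode

Here is a VM, vm-bridge, whose NIC is in Bridge mode.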

[root@base-k8s-master-1 kubevirt]# kubectl get vm vm-bridge -o jsonpath='{.spec.template.spec.domain.devices}' | jq

{

"disks": [

{

"disk": {

"bus": "virtio"

},

"name": "containerdisk"

}

],

"interfaces": [

{

"bridge": {},

"model": "virtio",

"name": "default"

}

]

}

View the NIC information of the Pod running the VM:

[root@base-k8s-master-1 kubevirt]# kubectl exec -it virt-launcher-vm-bridge-gdlf8 -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: eth0-nic@if110: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue master k6t-eth0 state UP group default qlen 1000

link/ether ca:da:2e:fb:67:28 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::c8da:2eff:fefb:6728/64 scope link

valid_lft forever preferred_lft forever

4: k6t-eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default qlen 1000

link/ether 16:98:43:25:4f:2c brd ff:ff:ff:ff:ff:ff

inet 169.254.75.10/32 scope global k6t-eth0

valid_lft forever preferred_lft forever

inet6 fe80::c8da:2eff:fefb:6728/64 scope link

valid_lft forever preferred_lft forever

5: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc fq_codel master k6t-eth0 state UP group default qlen 1000

link/ether 16:98:43:25:4f:2c brd ff:ff:ff:ff:ff:ff

inet6 fe80::1498:43ff:fe25:4f2c/64 scope link

valid_lft forever preferred_lft forever

6: eth0: <BROADCAST,NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ether 9e:ca:72:f5:d2:cd brd ff:ff:ff:ff:ff:ff

inet 10.100.171.29/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::9cca:72ff:fef5:d2cd/64 scope link

valid_lft forever preferred_lft forever

View the NIC information inside the VM:

[root@base-k8s-master-1 kubevirt]# virtctl console vm-bridge

Successfully connected to vm-bridge console. The escape sequence is ^]

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc pfifo_fast qlen 1000

link/ether 9e:ca:72:f5:d2:cd brd ff:ff:ff:ff:ff:ff

inet 10.100.171.29/32 brd 10.255.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::9cca:72ff:fef5:d2cd/64 scope link

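valid_lft forever preferred_lft forever

In this mode the VM has the same address as the Pod, and all interfaces are connected through the bridge. (One odd thing here: the Pod contains an interface, eth0, with the same MAC address as the VM's NIC, and I don't know why this interface exists.)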

[root@base-k8s-master-1 ~]# kubectl exec -it virt-launcher-vm-bridge-gdlf8 -- /bin/bash

bash-5.1$ virsh domiflist default_vm-bridge

Authorization not available. Check if polkit service is running or see debug message for more information.

Interface Type Source Model MAC

------------------------------------------------------------------------------

tap0 ethernet - virtio-non-transitional 9e:ca:72:f5:d2:cd

bash-5.1$ /tmp/brctl show

bridge name bridge id STP enabled interfaces

k6t-eth0 8000.169843254f2c no eth0-nic

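tap0

Unlike Masquerade mode, a Pod in Bridge mode has no routing information; ip route returns nothing.

[root@base-k8s-master-1 kubevirt]# kubectl exec -it virt-launcher-vm-bridge-gdlf8 -- ip route

When should you choose Bridge mode?

Generally, every NIC other than the first (default) one uses Bridge mode.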

nftables rules for NIC MAC checking

Earlier, when creating the NetworkAttachmentDefinition, macspoofchk was set to true, which enables MAC address checking.

apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: linux-bridge-br-1
  namespace: default
spec:
  config: '{
      "name":"br-1",
      "type":"bridge",
      "cniVersion":"0.3.1",
      "bridge":"br-1",
      "macspoofchk":true,
      "ipam": {
        "type": "host-local",
        "subnet": "192.168.255.0/24",
        "routes": [
          {
            "dst": "0.0.0.0/0",
            "gw": "192.168.255.254"
          }
        ]
      },
      "preserveDefaultVlan":false
    }'

Now let's look at the nftables rules. When a node runs a Pod that has a NIC with MAC checking enabled, nftables rules like these are added on that node:

[root@base-k8s-master-1 ~]# ssh base-k8s-worker-1

[root@base-k8s-worker-1 ~]# nft list ruleset

...output omitted...

table bridge nat {

chain PREROUTING {

type filter hook prerouting priority dstnat; policy accept;

iifname "vethe686a67c" jump cni-br-iface-cd8cc64d3f7f8fbd5027610a4ab78dec0c94479401005c760079613f112c25ad-pod6c270ef2f25 comment "macspoofchk-cd8cc64d3f7f8fbd5027610a4ab78dec0c94479401005c760079613f112c25ad-pod6c270ef2f25"

}

chain cni-br-iface-cd8cc64d3f7f8fbd5027610a4ab78dec0c94479401005c760079613f112c25ad-pod6c270ef2f25 {

jump cni-br-iface-cd8cc64d3f7f8fbd5027610a4ab78dec0c94479401005c760079613f112c25ad-pod6c270ef2f25-mac comment "macspoofchk-cd8cc64d3f7f8fbd5027610a4ab78dec0c94479401005c760079613f112c25ad-pod6c270ef2f25"

}

chain cni-br-iface-cd8cc64d3f7f8fbd5027610a4ab78dec0c94479401005c760079613f112c25ad-pod6c270ef2f25-mac {

ether saddr 7e:b2:29:d6:66:b3 return comment "macspoofchk-cd8cc64d3f7f8fbd5027610a4ab78dec0c94479401005c760079613f112c25ad-pod6c270ef2f25"

drop comment "macspoofchk-cd8cc64d3f7f8fbd5027610a4ab78dec0c94479401005c760079613f112c25ad-pod6c270ef2f25"

}

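}

Configuring a VM NIC in Masquerade mode

The default NIC mode for a VM is bridge.

The VM's default NIC (the first one) is normally associated with the Pod's NIC, and this NIC usually needs to be changed to Masquerade mode.

Add or modify the following fields in the VM's YAML: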

[root@base-k8s-master-1 kubevirt]# kubectl get vm vm-masquerade -o yaml

...output omitted...

spec:
  template:
    spec:
      domain:
        devices:
          interfaces:
          - masquerade: {}
            model: virtio
            name: default
      networks:
      - name: default
        pod: {}

Then restart the VM.
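The same change can be sketched programmatically. The Python snippet below is a minimal illustration that works on a plain dict shaped like the VM manifest. The function name set_masquerade and the sample manifest are made up for this example, and it does not talk to a cluster.

```python
import copy
import json

BINDINGS = ("bridge", "masquerade", "sriov")  # binding keys discussed in this post


def set_masquerade(vm, iface_name="default"):
    """Return a copy of the manifest whose interface iface_name uses masquerade."""
    vm = copy.deepcopy(vm)
    spec = vm["spec"]["template"]["spec"]
    for iface in spec["domain"]["devices"]["interfaces"]:
        if iface["name"] == iface_name:
            for key in BINDINGS:
                iface.pop(key, None)  # drop whatever binding was set before
            iface["masquerade"] = {}
    return vm


# Hypothetical manifest fragment with the default NIC still in bridge mode.
vm = {
    "spec": {"template": {"spec": {
        "domain": {"devices": {"interfaces": [
            {"bridge": {}, "model": "virtio", "name": "default"},
        ]}},
        "networks": [{"name": "default", "pod": {}}],
    }}}
}

patched = set_masquerade(vm)
print(json.dumps(patched["spec"]["template"]["spec"]["domain"]["devices"], indent=2))
```

The function returns a modified copy instead of editing in place, so the original manifest stays available for comparison.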

Hot-plugging VM NICs

https://kubevirt.io/user-guide/network/hotplug_interfaces/#hotplug-network-interfaces

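First, three points from the official documentation:

- KubeVirt supports hot-plugging network interfaces into a running virtual machine (VM).

- Hot plug is supported for interfaces that use the virtio model and are connected through bridge binding or SR-IOV binding.

- Hot unplug is supported only for interfaces connected through bridge binding.

Versions earlier than v1.4 require the HotplugNICs feature gate to be enabled. Existing VMs from version v0.59.0 or lower do not support hot-unplugging interfaces.

Next, the requirements on the network components:

- Multus Dynamic Networks Controller: this daemon listens for annotation changes and triggers Multus to configure a new attachment for the pod.

- Multus CNI running as a thick plugin: this version of Multus exposes an endpoint that creates attachments for a given pod on demand.

If these Multus prerequisites are not available, you can try adding the interface through live migration instead, which recreates the Pod without interrupting the VM.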
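The binding rules listed above can be condensed into two small checks. This is only a sketch of the documented conditions as summarized in this post; the function names are invented here and are not part of any KubeVirt API.

```python
def can_hotplug(model, binding):
    """Hot plug: virtio model, connected through bridge or SR-IOV binding."""
    return model == "virtio" and binding in ("bridge", "sriov")


def can_hot_unplug(binding):
    """Hot unplug: only interfaces connected through bridge binding."""
    return binding == "bridge"


for model, binding in [("virtio", "bridge"), ("virtio", "sriov"), ("virtio", "masquerade")]:
    print(model, binding, can_hotplug(model, binding), can_hot_unplug(binding))
```

Note that a masquerade-bound default NIC fails both checks, which matches the setup used later in this post: the default NIC stays as it is and only the extra bridge NIC is plugged and unplugged.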

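Deploying multus-dynamic-networks-controller

I previously wrote about deploying Kubernetes-nmstate and Multus-cni to add NICs to Pods. That setup does add NICs, but only when the Pod is created, so multus-dynamic-networks-controller is needed as well.

Multus Dynamic Networks Controller is an extension to Multus CNI. Its core function is to manage a Pod's additional networks dynamically, adding or removing network interfaces while the Pod is running, without restarting the Pod.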

[root@base-k8s-master-1 multus-cni]# git clone https://github.com/k8snetworkplumbingwg/multus-dynamic-networks-controller.git

Cloning into 'multus-dynamic-networks-controller'...

remote: Enumerating objects: 14249, done.

remote: Counting objects: 100% (1032/1032), done.

remote: Compressing objects: 100% (397/397), done.

remote: Total 14249 (delta 758), reused 634 (delta 634), pack-reused 13217 (from 4)

Receiving objects: 100% (14249/14249), 19.26 MiB | 2.98 MiB/s, done.

Resolving deltas: 100% (6398/6398), done.

[root@base-k8s-master-1 multus-cni]# cd multus-dynamic-networks-controller/

[root@base-k8s-master-1 multus-dynamic-networks-controller]# kubectl apply -f manifests/dynamic-networks-controller.yaml

clusterrole.rbac.authorization.k8s.io/dynamic-networks-controller created

clusterrolebinding.rbac.authorization.k8s.io/dynamic-networks-controller created

serviceaccount/dynamic-networks-controller created

configmap/dynamic-networks-controller-config created

daemonset.apps/dynamic-networks-controller-ds created

Adding a new NIC to the VM

Add the following fields to the VM's YAML:

[root@base-k8s-master-1 kubevirt]# kubectl get vm vm-masquerade -o yaml

...output omitted...

spec:
  template:
    spec:
      domain:
        devices:
          interfaces:
          - masquerade: {}
            model: virtio
            name: default
          - name: net1
            model: virtio
            bridge: {}
      networks:
      - name: default
        pod: {}
      - name: net1
        multus:
          networkName: linux-bridge-br-1

View the VMI information:

[root@base-k8s-master-1 ~]# kubectl get vmi vm-masquerade -ojsonpath="{ @.status.interfaces }" | jq

[

{

"infoSource": "domain",

"ipAddress": "10.100.171.36",

"ipAddresses": [

"10.100.171.36"

],

"mac": "ba:66:90:ab:4d:5d",

"name": "default",

"queueCount": 1

},

{

"infoSource": "domain, multus-status",

"ipAddress": "192.168.255.106",

"ipAddresses": [

"192.168.255.106"

],

"mac": "7a:2d:f9:35:a6:5e",

"name": "net1",

"queueCount": 1

}

]

View the NIC information inside the VM:

[root@base-k8s-master-1 ~]# virtctl console vm-masquerade

Successfully connected to vm-masquerade console. The escape sequence is ^]

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc pfifo_fast qlen 1000

link/ether ba:66:90:ab:4d:5d brd ff:ff:ff:ff:ff:ff

inet 10.0.2.2/24 brd 10.0.2.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::b866:90ff:feab:4d5d/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop qlen 1000

link/ether 7a:2d:f9:35:a6:5e brd ff:ff:ff:ff:ff:ff

You can see that an additional NIC has appeared.

Now check the Pod's NIC information:

[root@base-k8s-master-1 ~]# kubectl exec -it virt-launcher-vm-masquerade-tmlft -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: eth0@if134: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default qlen 1000

link/ether ba:66:90:ab:4d:5d brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.100.171.36/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::b866:90ff:feab:4d5d/64 scope link

valid_lft forever preferred_lft forever

4: k6t-eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default qlen 1000

link/ether 02:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.1/24 brd 10.0.2.255 scope global k6t-eth0

valid_lft forever preferred_lft forever

inet6 fe80::ff:fe00:0/64 scope link

valid_lft forever preferred_lft forever

5: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc fq_codel master k6t-eth0 state UP group default qlen 1000

link/ether aa:df:af:de:1d:bb brd ff:ff:ff:ff:ff:ff

inet6 fe80::a8df:afff:fede:1dbb/64 scope link

valid_lft forever preferred_lft forever

6: 6c270ef2f25-nic@if135: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master k6t-6c270ef2f25 state UP group default

link/ether be:fa:93:c3:ed:86 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::bcfa:93ff:fec3:ed86/64 scope link

valid_lft forever preferred_lft forever

7: k6t-6c270ef2f25: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 9e:df:7f:90:61:e8 brd ff:ff:ff:ff:ff:ff

inet 169.254.75.10/32 scope global k6t-6c270ef2f25

valid_lft forever preferred_lft forever

inet6 fe80::bcfa:93ff:fec3:ed86/64 scope link

valid_lft forever preferred_lft forever

8: tap6c270ef2f25: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master k6t-6c270ef2f25 state UP group default qlen 1000

link/ether 9e:df:7f:90:61:e8 brd ff:ff:ff:ff:ff:ff

inet6 fe80::9cdf:7fff:fe90:61e8/64 scope link

valid_lft forever preferred_lft forever

9: pod6c270ef2f25: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 7a:2d:f9:35:a6:5e brd ff:ff:ff:ff:ff:ff

inet 192.168.255.106/24 brd 192.168.255.255 scope global pod6c270ef2f25

valid_lft forever preferred_lft forever

inet6 fe80::782d:f9ff:fe35:a65e/64 scope link

valid_lft forever preferred_lft forever

If the change does not seem to take effect, try restarting the VM with virtctl restart first. Restarting this way recreates the Pod.
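The two entries added in this section (one under interfaces, one under networks) can also be expressed as JSON Patch operations. The Python sketch below only builds and prints the patch; the function name add_nic_patch is made up for this example, and the assumption is that the VM already has both lists in its template, as in the YAML above.

```python
import json


def add_nic_patch(iface_name, nad_name):
    """Build JSON Patch (RFC 6902) operations that append a bridge-bound
    interface and its Multus network to a VM template."""
    base = "/spec/template/spec"
    return [
        {
            "op": "add",
            "path": base + "/domain/devices/interfaces/-",  # "-" appends to the list
            "value": {"name": iface_name, "model": "virtio", "bridge": {}},
        },
        {
            "op": "add",
            "path": base + "/networks/-",
            "value": {"name": iface_name, "multus": {"networkName": nad_name}},
        },
    ]


patch = add_nic_patch("net1", "linux-bridge-br-1")
print(json.dumps(patch, indent=2))
```

The printed JSON could then be handed to kubectl patch with --type=json, which is one possible alternative to editing the VM by hand. The interface name and the network name must match, because that is how the interface is tied to its network.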

Hot-unplugging VM NICs

Add the field state: absent to the NIC you want to remove.

[root@base-k8s-master-1 ~]# kubectl edit vm vm-masquerade

...output omitted...

spec:
  template:
    spec:
      domain:
        devices:
          interfaces:
          ...output omitted...
          - bridge: {}
            model: virtio
            name: net1
            state: absent # add this field

Then check the VM:

[root@base-k8s-master-1 ~]# virtctl console vm-masquerade

Successfully connected to vm-masquerade console. The escape sequence is ^]

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc pfifo_fast qlen 1000

link/ether 7e:57:47:45:cc:3a brd ff:ff:ff:ff:ff:ff

inet 10.0.2.2/24 brd 10.0.2.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::7c57:47ff:fe45:cc3a/64 scope link

valid_lft forever preferred_lft forever

Then check the Pod's NICs:

[root@base-k8s-master-1 ~]# kubectl exec -it virt-launcher-vm-masquerade-fqvj4 -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: eth0@if89: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default qlen 1000

link/ether 4a:73:68:69:c0:2f brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.100.223.52/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::4873:68ff:fe69:c02f/64 scope link

valid_lft forever preferred_lft forever

4: 6c270ef2f25-nic@if90: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 46:ae:57:a9:fa:b6 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::44ae:57ff:fea9:fab6/64 scope link

valid_lft forever preferred_lft forever

5: k6t-eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP group default qlen 1000

link/ether 02:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.1/24 brd 10.0.2.255 scope global k6t-eth0

valid_lft forever preferred_lft forever

inet6 fe80::ff:fe00:0/64 scope link

valid_lft forever preferred_lft forever

6: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc fq_codel master k6t-eth0 state UP group default qlen 1000

link/ether 66:f8:14:63:d4:15 brd ff:ff:ff:ff:ff:ff

inet6 fe80::64f8:14ff:fe63:d415/64 scope link

valid_lft forever preferred_lft forever
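The state: absent edit from this section can be sketched as a small helper that works on a dict shaped like the VM manifest. This is an illustration only: the function name mark_absent and the sample manifest are made up, and the bridge-only check simply mirrors the hot-unplug rule quoted earlier.

```python
import copy


def mark_absent(vm, iface_name):
    """Return a copy of the manifest with interface iface_name marked state: absent."""
    vm = copy.deepcopy(vm)
    ifaces = vm["spec"]["template"]["spec"]["domain"]["devices"]["interfaces"]
    for iface in ifaces:
        if iface["name"] == iface_name:
            if "bridge" not in iface:
                # Only bridge-bound interfaces support hot unplug.
                raise ValueError(iface_name + " is not connected through bridge binding")
            iface["state"] = "absent"
            return vm
    raise KeyError(iface_name)


# Hypothetical manifest fragment matching the VM used in this post.
vm = {
    "spec": {"template": {"spec": {"domain": {"devices": {"interfaces": [
        {"masquerade": {}, "model": "virtio", "name": "default"},
        {"bridge": {}, "model": "virtio", "name": "net1"},
    ]}}}}}
}

updated = mark_absent(vm, "net1")
print(updated["spec"]["template"]["spec"]["domain"]["devices"]["interfaces"][1])
```

Trying the same on the default interface raises an error in this sketch, since that NIC uses masquerade binding.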