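Why add a second network interface to a Pod

By default a Kubernetes Pod has a single network interface. Suppose a Pod carries both management traffic and business traffic: one interface cannot isolate the two, so the Pod needs additional interfaces to keep them apart.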

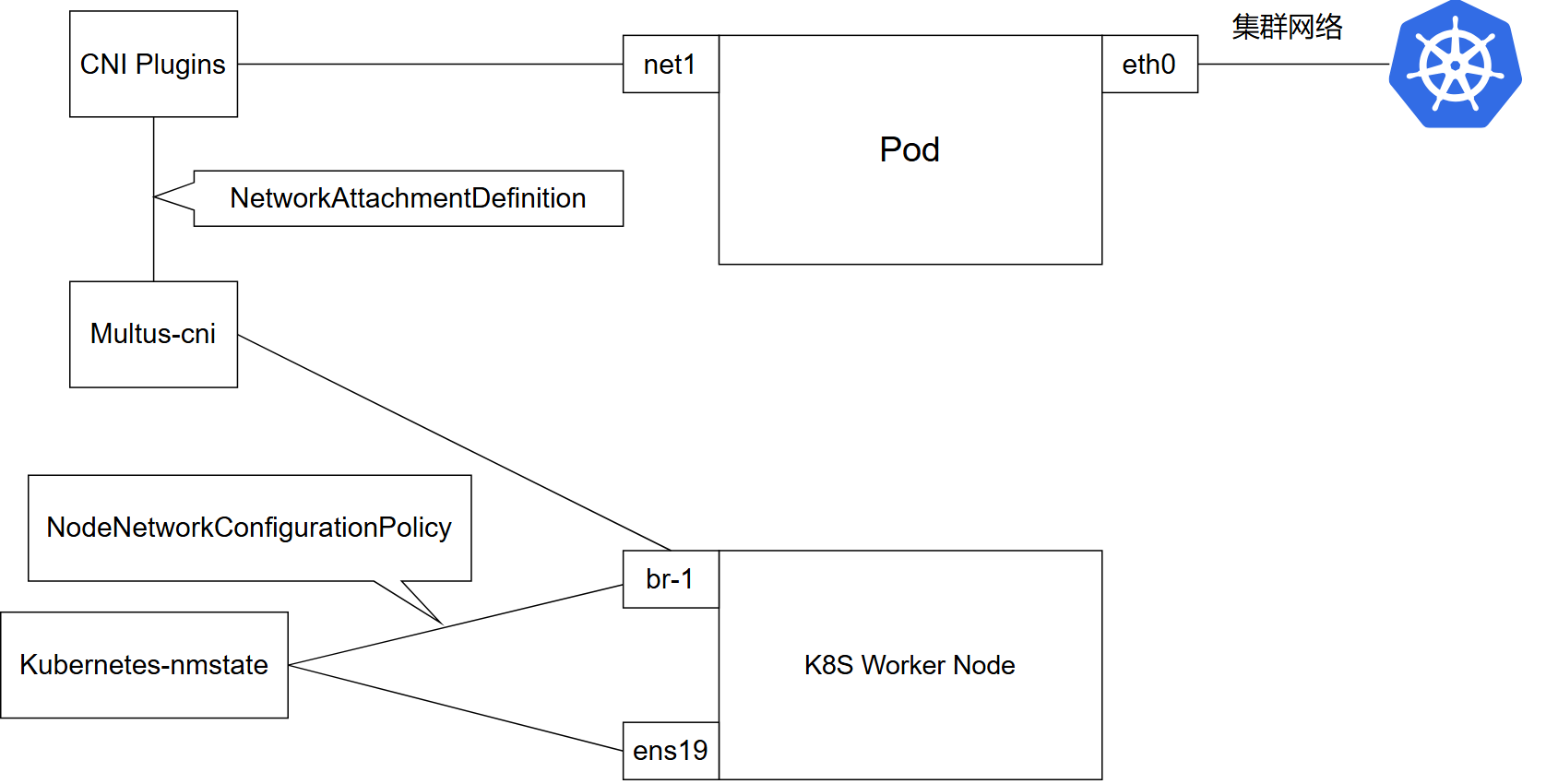

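Components needed for a Pod's second interface

Two components are needed:

- Kubernetes-nmstate: manages network configuration on Kubernetes nodes. It drives NetworkManager to configure the physical interfaces, and can create bridges and bonds.

- Multus-cni: attaches multiple network interfaces to a Pod.

Kubernetes-nmstate creates a bridge on a physical interface of each K8S node, and Multus-cni uses that bridge to give the Pod its second interface.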

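In diagram form (I believe I have this right):

Suppose each physical node has an interface named ens19. Kubernetes-nmstate creates a bridge named br-1 on ens19, and Multus-cni then attaches Pod interfaces to br-1.

The end result is that a new Pod has two interfaces: eth0, on the cluster-internal network, and net1, connected through br-1 to the physical network behind ens19.

Multus-cni cannot set up an interface on its own: it hands the work to another CNI plugin, for example Calico.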

Kubernetes-nmstate

Every K8S Worker node has an interface named ens19. Kubernetes-nmstate is used to create a bridge named br-1 on ens19 on each Worker node.

Deployment

https://github.com/nmstate/kubernetes-nmstate/releases

[root@base-k8s-master-1 multus-cni]# kubectl apply -f https://github.com/nmstate/kubernetes-nmstate/releases/download/v0.83.0/namespace.yaml

namespace/nmstate created

[root@base-k8s-master-1 multus-cni]# kubectl apply -f https://github.com/nmstate/kubernetes-nmstate/releases/download/v0.83.0/service_account.yaml

serviceaccount/nmstate-operator created

[root@base-k8s-master-1 multus-cni]# kubectl apply -f https://github.com/nmstate/kubernetes-nmstate/releases/download/v0.83.0/role.yaml

clusterrole.rbac.authorization.k8s.io/nmstate-operator created

role.rbac.authorization.k8s.io/nmstate-operator created

[root@base-k8s-master-1 multus-cni]# kubectl apply -f https://github.com/nmstate/kubernetes-nmstate/releases/download/v0.83.0/role_binding.yaml

rolebinding.rbac.authorization.k8s.io/nmstate-operator created

clusterrolebinding.rbac.authorization.k8s.io/nmstate-operator created

[root@base-k8s-master-1 multus-cni]# kubectl apply -f https://github.com/nmstate/kubernetes-nmstate/releases/download/v0.83.0/operator.yaml

deployment.apps/nmstate-operator created
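The five manifests applied above differ only in their file name, so the same step can be written as a loop. This is a minimal sketch, assuming the release keeps the same file layout as v0.83.0; `nmstate_manifest_urls` is a made-up helper name, not part of kubernetes-nmstate.

```bash
# Print the kubernetes-nmstate manifest URLs for one release, in the
# order they were applied above (namespace first, operator last).
# nmstate_manifest_urls is a hypothetical helper for illustration only.
nmstate_manifest_urls() {
  local version="$1" name
  local base="https://github.com/nmstate/kubernetes-nmstate/releases/download/${version}"
  for name in namespace service_account role role_binding operator; do
    printf '%s/%s.yaml\n' "$base" "$name"
  done
}
```

It could be used as `nmstate_manifest_urls v0.83.0 | xargs -n1 kubectl apply -f`, which applies the manifests one by one in the printed order.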

[root@base-k8s-master-1 multus-cni]# cat <<EOF | kubectl create -f -
apiVersion: nmstate.io/v1
kind: NMState
metadata:
  name: nmstate
EOF

nmstate.nmstate.io/nmstate created

[root@base-k8s-master-1 multus-cni]# kubectl get nmstates.nmstate.io

NAME AGE

nmstate 53m

Once it is created, the network state of every node can be inspected:

[root@base-k8s-master-1 multus-cni]# kubectl get nodenetworkstates.nmstate.io

NAME AGE

base-k8s-master-1.example.com 39m

base-k8s-worker-1.example.com 39m

base-k8s-worker-2.example.com 39m

[root@base-k8s-master-1 multus-cni]# kubectl get nodenetworkstates.nmstate.io base-k8s-master-1.example.com -o json | jq -r '.status.currentState.interfaces[].name'

ens18

kube-ipvs0

lo

tunl0

virbr0

[root@base-k8s-master-1 multus-cni]# kubectl get nodenetworkstates.nmstate.io base-k8s-worker-1.example.com -o json | jq -r '.status.currentState.interfaces[].name'

ens18

ens19

kube-ipvs0

lo

tunl0

virbr0

[root@base-k8s-master-1 multus-cni]# kubectl get nodenetworkstates.nmstate.io base-k8s-worker-2.example.com -o json | jq -r '.status.currentState.interfaces[].name'

ens18

ens19

kube-ipvs0

lo

tunl0

virbr0

Each Worker node has an ens19 interface; the next step creates a bridge on it.
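Checking node by node gets tedious on a larger cluster. The sketch below loops over every NodeNetworkState and prints the nodes that report a given interface. It assumes the same `.status.currentState.interfaces[].name` path used with jq above, read here through kubectl's jsonpath output instead; `nodes_with_interface` is a hypothetical helper name.

```bash
# Print the name of every node whose NodeNetworkState lists the given
# interface. nodes_with_interface is a hypothetical helper.
nodes_with_interface() {
  local ifname="$1" nns names
  for nns in $(kubectl get nodenetworkstates.nmstate.io -o name); do
    # Space-separated interface names reported for this node.
    names="$(kubectl get "$nns" -o jsonpath='{.status.currentState.interfaces[*].name}')"
    case " $names " in
      *" $ifname "*) echo "${nns##*/}" ;;  # strip the "resource/" prefix
    esac
  done
}
```

Running `nodes_with_interface ens19` against the cluster above should list the two Worker nodes and leave out the master, which has no ens19.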

Creating the bridge

https://github.com/nmstate/kubernetes-nmstate

First prepare the NodeNetworkConfigurationPolicy manifest (saved here as linux-bridge-br1-ens19.yml):

apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: linux-bridge-br1-ens19
spec:
  nodeSelector:
    linux-bridge-br1: "true"
  desiredState:
    interfaces:
      - name: br-1
        description: Linux bridge with ens19 as a port
        type: linux-bridge
        state: up
        ipv4:
          dhcp: true
          enabled: true
        bridge:
          options:
            stp:
              enabled: false
          port:
            - name: ens19

Label the Worker nodes so that the nodeSelector matches them, then create the NodeNetworkConfigurationPolicy:

[root@base-k8s-master-1 multus-cni]# kubectl label node base-k8s-worker-1.example.com linux-bridge-br1=true

node/base-k8s-worker-1.example.com labeled

[root@base-k8s-master-1 multus-cni]# kubectl label node base-k8s-worker-2.example.com linux-bridge-br1=true

node/base-k8s-worker-2.example.com labeled

[root@base-k8s-master-1 multus-cni]# kubectl apply -f linux-bridge-br1-ens19.yml

nodenetworkconfigurationpolicy.nmstate.io/linux-bridge-br1-ens19 created

[root@base-k8s-master-1 multus-cni]# kubectl get nodenetworkconfigurationpolicies.nmstate.io

NAME STATUS REASON

linux-bridge-br1-ens19 Available SuccessfullyConfigured

Check the interfaces on the Worker nodes:

[root@base-k8s-master-1 multus-cni]# kubectl get nodenetworkstates.nmstate.io base-k8s-worker-1.example.com -o json | jq -r '.status.currentState.interfaces[].name'

br-1

ens18

ens19

kube-ipvs0

lo

tunl0

virbr0

[root@base-k8s-master-1 multus-cni]# kubectl get nodenetworkstates.nmstate.io base-k8s-worker-2.example.com -o json | jq -r '.status.currentState.interfaces[].name'

br-1

ens18

ens19

kube-ipvs0

lo

tunl0

virbr0

[root@base-k8s-master-1 multus-cni]# ssh base-k8s-worker-1.example.com ip -br a

...output omitted...

ens19 UP

...output omitted...

br-1 UP

[root@base-k8s-master-1 multus-cni]# ssh base-k8s-worker-2.example.com ip -br a

...output omitted...

ens19 UP

...output omitted...

br-1 UP

The br-1 bridge now exists on both Worker nodes; next, configure Multus-cni.
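When these steps are scripted, re-running `kubectl get` until the policy shows Available can be replaced by `kubectl wait`. A minimal sketch, assuming the policy exposes a condition of type Available (matching the STATUS column shown earlier); `wait_for_policy` and the 2m default timeout are illustrative choices.

```bash
# Block until the NodeNetworkConfigurationPolicy reports the Available
# condition, or fail after the timeout. wait_for_policy is a hypothetical
# helper; the 2m default is an arbitrary choice.
wait_for_policy() {
  local policy="$1" timeout="${2:-2m}"
  kubectl wait nodenetworkconfigurationpolicies.nmstate.io "$policy" \
    --for=condition=Available --timeout="$timeout"
}
```

For example, `wait_for_policy linux-bridge-br1-ens19` would return once the bridge has been configured on every selected node, so a script can move on to the Multus-cni steps safely.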

Multus-cni

https://github.com/k8snetworkplumbingwg/multus-cni/tree/master

https://github.com/k8snetworkplumbingwg/multi-net-spec

https://github.com/containernetworking/plugins

Multus-cni is used to add the second interface to the Pod.

Deployment

[root@base-k8s-master-1 multus-cni]# wget https://raw.githubusercontent.com/k8snetworkplumbingwg/multus-cni/master/deployments/multus-daemonset-thick.yml

[root@base-k8s-master-1 multus-cni]# kubectl apply -f multus-daemonset-thick.yml

customresourcedefinition.apiextensions.k8s.io/network-attachment-definitions.k8s.cni.cncf.io created

clusterrole.rbac.authorization.k8s.io/multus created

clusterrolebinding.rbac.authorization.k8s.io/multus created

serviceaccount/multus created

configmap/multus-daemon-config created

daemonset.apps/kube-multus-ds created

[root@base-k8s-master-1 multus-cni]# kubectl get pods --all-namespaces | grep -i multus

kube-system kube-multus-ds-5btgm 1/1 Running 0 5m22s

kube-system kube-multus-ds-kfssx 1/1 Running 0 5m22s

kube-system kube-multus-ds-zv8qr 1/1 Running 0 5m22s

Configuration

Prepare the NetworkAttachmentDefinition manifest (saved here as linux-bridge-br-1.yml):

apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: linux-bridge-br-1
  namespace: default
spec:
  config: '{
      "name":"br-1",
      "type":"bridge",
      "cniVersion":"0.3.1",
      "bridge":"br-1",
      "macspoofchk":true,
      "ipam": {
        "type": "host-local",
        "subnet": "192.168.255.0/24",
        "routes": [
          {
            "dst": "0.0.0.0/0",
            "gw": "192.168.255.254"
          }
        ]
      },
      "preserveDefaultVlan":false
    }'

The config field holds the CNI configuration, and its bridge value must match the br-1 bridge created earlier; see the CNI documentation for more details.
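Only a few values in this manifest are site-specific: the resource name, the bridge, the subnet, and the gateway. The sketch below templates exactly those four and leaves everything else as in the manifest above. `render_bridge_nad` is a hypothetical helper, and the fixed `default` namespace is carried over from the example.

```bash
# Print a bridge-type NetworkAttachmentDefinition for the given values.
# render_bridge_nad is a hypothetical helper; all other fields are copied
# from the manifest shown above.
render_bridge_nad() {
  local name="$1" bridge="$2" subnet="$3" gateway="$4"
  cat <<EOF
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: ${name}
  namespace: default
spec:
  config: '{
      "name": "${bridge}",
      "type": "bridge",
      "cniVersion": "0.3.1",
      "bridge": "${bridge}",
      "macspoofchk": true,
      "ipam": {
        "type": "host-local",
        "subnet": "${subnet}",
        "routes": [ { "dst": "0.0.0.0/0", "gw": "${gateway}" } ]
      },
      "preserveDefaultVlan": false
    }'
EOF
}
```

The manifest from this section would then correspond to `render_bridge_nad linux-bridge-br-1 br-1 192.168.255.0/24 192.168.255.254 | kubectl apply -f -`.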

Create the NetworkAttachmentDefinition:

[root@base-k8s-master-1 multus-cni]# kubectl apply -f linux-bridge-br-1.yml

networkattachmentdefinition.k8s.cni.cncf.io/linux-bridge-br-1 created

[root@base-k8s-master-1 multus-cni]# kubectl get network-attachment-definitions.k8s.cni.cncf.io

NAME AGE

linux-bridge-br-1 100s

Adding an interface to a Pod

Creating a Pod with a fixed interface

Prepare a test Pod manifest (saved here as multus-demo.yml):

apiVersion: v1
kind: Pod
metadata:
  name: multus-demo
  annotations:
    k8s.v1.cni.cncf.io/networks: '[
        { "name": "linux-bridge-br-1",
          "ips": [ "192.168.255.100/24" ],
          "mac": "c2:b0:57:49:47:f1"
        }]'
spec:
  containers:
    - name: busybox
      image: busybox
      imagePullPolicy: IfNotPresent
      command:
        - "sh"
        - "-c"
        - "sleep 3600"

The important field is name, which refers to the NetworkAttachmentDefinition. Specifying name alone is enough to get an interface; ips and mac only pin its address.
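For comparison, here is a sketch of the name-only form, using the short annotation syntax in which the value is just the NetworkAttachmentDefinition name. `render_minimal_pod` and the Pod name `multus-demo-auto` are made up for illustration. With this form the address of net1 would presumably come from the host-local IPAM range rather than being fixed.

```bash
# Print a Pod manifest that requests one extra interface by
# NetworkAttachmentDefinition name only (no fixed IP or MAC).
# render_minimal_pod is a hypothetical helper.
render_minimal_pod() {
  local pod="$1" nad="$2"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${pod}
  annotations:
    k8s.v1.cni.cncf.io/networks: ${nad}
spec:
  containers:
    - name: busybox
      image: busybox
      imagePullPolicy: IfNotPresent
      command: ["sh", "-c", "sleep 3600"]
EOF
}
```

A possible invocation is `render_minimal_pod multus-demo-auto linux-bridge-br-1 | kubectl apply -f -`.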

Create the Pod to test it:

[root@base-k8s-master-1 multus-cni]# kubectl apply -f multus-demo.yml

pod/multus-demo created

[root@base-k8s-master-1 multus-cni]# kubectl exec -it multus-demo -- ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: eth0@if94: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1480 qdisc noqueue qlen 1000

link/ether 76:e7:f6:4a:6d:16 brd ff:ff:ff:ff:ff:ff

inet 10.100.171.22/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::74e7:f6ff:fe4a:6d16/64 scope link

valid_lft forever preferred_lft forever

4: net1@if95: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether c2:b0:57:49:47:f1 brd ff:ff:ff:ff:ff:ff

inet 192.168.255.100/24 brd 192.168.255.255 scope global net1

valid_lft forever preferred_lft forever

inet6 fe80::c0b0:57ff:fe49:47f1/64 scope link

valid_lft forever preferred_lft forever

[root@base-k8s-master-1 ~]# kubectl exec -it multus-demo -- ip route

default via 192.168.255.254 dev net1

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

192.168.255.0/24 dev net1 scope link src 192.168.255.100

The Pod now has an extra interface, net1@if95, with MAC c2:b0:57:49:47:f1 and IP address 192.168.255.100.
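The same information can be read without entering the container, because Multus-cni records the attachment result in a Pod annotation. The sketch below assumes the annotation key is `k8s.v1.cni.cncf.io/network-status` (older Multus releases used a slightly different key, so this may need adjusting); `pod_network_status` is a hypothetical helper.

```bash
# Print the network-status annotation that Multus-cni writes on a Pod.
# The annotation key is an assumption about the installed Multus version;
# pod_network_status is a hypothetical helper.
pod_network_status() {
  local pod="$1" namespace="${2:-default}"
  kubectl get pod "$pod" -n "$namespace" \
    -o jsonpath='{.metadata.annotations.k8s\.v1\.cni\.cncf\.io/network-status}'
}
```

Calling `pod_network_status multus-demo` should print a JSON list with one entry per interface, which is convenient for checking the IP and MAC of net1 from a script.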

After the Pod is created, a new interface also appears on the physical node that hosts it:

[root@base-k8s-worker-2 ~]# ip -br a

...output omitted...

br-1 UP fe80::c295:80a4:a26a:6fc4/64

calidb4c3e9a0b9@if3 UP fe80::ecee:eeff:feee:eeee/64

veth2c6fbec4@if4 UP fe80::bcad:b1ff:fee0:a928/64

Testing

A PVE server is connected to the same network as ens19 on the K8S Worker nodes; its address is 192.168.255.11.

root@pve-1:~# ip addr show vmbr100

319: vmbr100: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 74:9d:8f:85:da:8a brd ff:ff:ff:ff:ff:ff

inet 192.168.255.11/24 scope global vmbr100

valid_lft forever preferred_lft forever

inet6 fe80::4811:9cff:fe16:449f/64 scope link

valid_lft forever preferred_lft forever

From the PVE server, ping the container's address 192.168.255.100:

root@pve-1:~# ping -c4 192.168.255.100

PING 192.168.255.100 (192.168.255.100) 56(84) bytes of data.

64 bytes from 192.168.255.100: icmp_seq=1 ttl=64 time=0.310 ms

64 bytes from 192.168.255.100: icmp_seq=2 ttl=64 time=0.226 ms

64 bytes from 192.168.255.100: icmp_seq=3 ttl=64 time=0.192 ms

64 bytes from 192.168.255.100: icmp_seq=4 ttl=64 time=0.199 ms

--- 192.168.255.100 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3105ms

rtt min/avg/max/mdev = 0.192/0.231/0.310/0.046 ms
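The reverse direction can be checked from inside the Pod as well. A small sketch, relying on the ping applet shipped in the busybox image; `ping_from_pod` is a hypothetical helper and the default count of 4 mirrors the test above.

```bash
# Ping a target address from inside a Pod.
# ping_from_pod is a hypothetical helper; it assumes the container image
# provides a ping binary, as busybox does.
ping_from_pod() {
  local pod="$1" target="$2" count="${3:-4}"
  kubectl exec "$pod" -- ping -c "$count" "$target"
}
```

With the route table shown earlier, `ping_from_pod multus-demo 192.168.255.11` should leave through net1, since 192.168.255.0/24 is directly connected on that interface.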