KubeVirt Disk Types

KubeVirt has four disk types:

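- Lun: passes iSCSI or FC storage through to the virtual machine. SCSI commands reach the backing device directly instead of being emulated by QEMU.

  - Direct storage access: the virtual machine accesses the raw LUN on the storage backend, which avoids the extra overhead of a filesystem or an emulated block device and improves performance.

  - Suited to enterprise storage: works with backends such as iSCSI, Fibre Channel (FC), and NVMe-oF, whose volumes can be mapped straight into the VM.

- Disk: creates a virtual disk for the virtual machine, emulated by QEMU.

  - Supports different disk buses, such as virtio, sata, scsi, and ide.

  - Supports filesystem-backed storage.

  - Supports multiple storage backends, such as Ceph RBD, NFS, and iSCSI.

- Cdrom: attaches an optical disc.

  - Boots the VM into an operating system installer: start from an ISO and install the OS.

- Filesystems: shares a directory with the virtual machine through Virtio-FS (virtiofs).

  - High performance: compared with NFS, Virtio-FS accesses the host filesystem directly through vhost-user-fs, which reduces CPU load.

  - Low latency: with DAX, the VM can map host memory directly, which improves I/O performance.

  - No network dependency: unlike NFS or CephFS, virtiofs needs no network connection, which suits high-performance storage needs.

  - POSIX compatibility: supports standard Linux file permissions, symbolic links, and so on.

  - Native KVM support: virtiofs is supported at the kernel level by KVM/QEMU, so it works with KubeVirt.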

Creating the four disk types

YAML for the test virtual machine

---
apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  name: "fedora"
spec:
  storage:
    storageClassName: csi-rbd-sc
    resources:
      requests:
        storage: 5Gi
  source:
    http:
      url: "https://download.fedoraproject.org/pub/fedora/linux/releases/41/Cloud/x86_64/images/Fedora-Cloud-Base-Generic-41-1.4.x86_64.qcow2"
---
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: vm-dv
  namespace: default
spec:
  runStrategy: Always
  template:
    spec:
      architecture: amd64
      domain:
        devices:
          disks:
          - disk: {}
            name: containerdisk
          - disk: {}
            name: cloudinitdisk
          interfaces:
          - masquerade: {}
            name: default
        machine:
          type: q35
        resources:
          requests:
            memory: 512Mi
      networks:
      - name: default
        pod: {}
      volumes:
      - dataVolume:
          name: fedora
        name: containerdisk
      - cloudInitNoCloud:
          userData: |-
            #cloud-config
            chpasswd: { expire: False }
            hostname: vm-dv
            fqdn: vm-dv.example.com
            password: fedora
            ssh_authorized_keys:
              - ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCqK9cDuDDHO8NNIDhXvxcIAUDrzC+RY8ANhrXJ3fe7PGbslBNXBiPpNZNUahIUez4qrz92MXDOhyywf0OTjIYFsWqmof0ytCRzZeBRpeE0uEQIj793YN52yKIbQ797mThqmOCFAFx3ES9+/HtB6H/PWYGMvhyqmiFYNu46ttEKlu8TGQ6nh79eSc1AK+YT1UMVRm06aGp5mfhp2AuL5KTPsPHuJ9XXgPeam2rnaE1erde912qxX6k4PWtQknZ7ZSzlzgfbO2vVheNYoG9SLTZQHbvOws75pfHdI1T2SF9N3WeNRaIySBCGqxhPihavHa+Lg94kxFxLpqMdyKJzKpfT5NSpZZN1qV62JQbRWDzyOcio4ZLmUalF7IXaiD62wvYN64vS90zvUYxr4Gfzkrx9/Cp4+qPBS00KlJGcVU1vfQzdUv57HqVlYQN4Y0tqT7sYAa9/9tnSs/fYPol0NBYbuqxatmRaJltMbs46bOO6plYQJm6RCYbdanh7BqMcg4M= [email protected]
            runcmd:
              - 'sudo echo "The virtual machine initialization time is $(date "+%F %T")" > /tmp/install.log'
            bootcmd:
              - 'sudo echo "The virtual machine startup time is $(date "+%F %T")" > /tmp/boot.log'
        name: cloudinitdisk

Lun

Prerequisites

Prepare a virtual machine to serve as the iSCSI target:

# Create the logical volume
[root@awx-1 ~]# pvcreate /dev/vda
WARNING: xfs signature detected on /dev/vda at offset 0. Wipe it? [y/n]: y
  Wiping xfs signature on /dev/vda.
  Physical volume "/dev/vda" successfully created.
[root@awx-1 ~]# vgcreate iscsi /dev/vda
  Volume group "iscsi" successfully created
[root@awx-1 ~]# lvcreate -l 100%FREE --name iscsi_lvm iscsi
  Logical volume "iscsi_lvm" created.

# Install targetcli and start the related service
[root@awx-1 ~]# dnf install targetcli -y
[root@awx-1 ~]# systemctl start targetclid

# Configure the iSCSI target
[root@awx-1 ~]# targetcli
targetcli shell version 2.1.53
Copyright 2011-2013 by Datera, Inc and others.
For help on commands, type 'help'.

# Create the target IQN
/> iscsi/ create
Created target iqn.2003-01.org.linux-iscsi.awx-1.x8664:sn.939f5bb149d6.
Created TPG 1.
Global pref auto_add_default_portal=true
Created default portal listening on all IPs (0.0.0.0), port 3260.

# Create the LUN
/> iscsi/iqn.2003-01.org.linux-iscsi.awx-1.x8664:sn.939f5bb149d6/tpg1/luns create /dev/iscsi/iscsi_lvm
Created storage object dev-iscsi-iscsi_lvm.
Created LUN 0.

# Add an ACL for the initiator (host)
/> iscsi/iqn.2003-01.org.linux-iscsi.awx-1.x8664:sn.939f5bb149d6/tpg1/acls create iqn.2003-01.org.linux-iscsi.kubevirt:linux
Created Node ACL for iqn.2003-01.org.linux-iscsi.kubevirt:linux
Created mapped LUN 0.

# Change the listening address
/> iscsi/iqn.2003-01.org.linux-iscsi.awx-1.x8664:sn.939f5bb149d6/tpg1/portals/ delete 0.0.0.0 3260
Deleted network portal 0.0.0.0:3260
/> iscsi/iqn.2003-01.org.linux-iscsi.awx-1.x8664:sn.939f5bb149d6/tpg1/portals/ create 192.168.50.120 3260
Using default IP port 3260
Created network portal 192.168.50.120:3260.

# Save the configuration and exit
/> saveconfig
Last 10 configs saved in /etc/target/backup/.
Configuration saved to /etc/target/saveconfig.json
/> exit
Global pref auto_save_on_exit=true
Last 10 configs saved in /etc/target/backup/.
Configuration saved to /etc/target/saveconfig.json

Create a PVC backed by iSCSI

Prepare the PV and PVC YAML files

PV

apiVersion: v1
kind: PersistentVolume
metadata:
  name: iscsi-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  volumeMode: Block
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""
  iscsi:
    iqn: iqn.2003-01.org.linux-iscsi.awx-1.x8664:sn.939f5bb149d6
    targetPortal: 192.168.50.120:3260
    lun: 0
    readOnly: false
    initiatorName: iqn.2003-01.org.linux-iscsi.kubevirt:linux
    chapAuthSession: false

Here targetPortal must be an IP address; an FQDN does not work.
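Since targetPortal has to be an IP address, the value can be checked before the PV is applied. The following is a minimal sketch in POSIX shell, not part of the original setup: the function name is_ipv4_portal and the host name awx-1.example.com are hypothetical, and the check only covers IPv4 with an optional port.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the argument looks like IPv4[:port].
# The body runs in a subshell so IFS and the positional parameters stay local.
is_ipv4_portal() (
  host=${1%%:*}
  case $1 in
    *:*) port=${1#*:} ;;
    *)   port=3260 ;;          # iSCSI default port when none is given
  esac
  # The port must be purely numeric.
  case $port in
    ''|*[!0-9]*) return 1 ;;
  esac
  # The host must be four dot-separated numeric octets, each at most 255.
  IFS=.
  set -- $host
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    case $octet in
      ''|*[!0-9]*) return 1 ;;
    esac
    [ "$octet" -le 255 ] || return 1
  done
  return 0
)

# Demo: the portal from the PV above, and a hypothetical FQDN form.
for portal in 192.168.50.120:3260 awx-1.example.com:3260; do
  if is_ipv4_portal "$portal"; then
    echo "$portal: ok"
  else
    echo "$portal: not an IPv4 portal, use the IP address instead"
  fi
done
```

A check like this only catches the mistake early; the kubelet symptom of an FQDN portal is described under Troubleshooting.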

PVC

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: iscsi-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
  storageClassName: ""
  volumeName: iscsi-pv
  volumeMode: Block

Create them:

# Create the PV
[root@base-k8s-master-1 kubevirt]# kubectl apply -f iscsi-pv.yml
persistentvolume/iscsi-pv created
[root@base-k8s-master-1 kubevirt]# kubectl get pv iscsi-pv
NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
iscsi-pv   5Gi        RWX            Retain           Available                          <unset>                          53s

# Create the PVC
[root@base-k8s-master-1 kubevirt]# kubectl apply -f iscsi-pvc.yml
persistentvolumeclaim/iscsi-pvc created

# Check the status
[root@base-k8s-master-1 kubevirt]# kubectl get pvc iscsi-pvc
NAME        STATUS   VOLUME     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
iscsi-pvc   Bound    iscsi-pv   5Gi        RWX                           <unset>                 77s
[root@base-k8s-master-1 kubevirt]# kubectl get pv iscsi-pv
NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
iscsi-pv   5Gi        RWX            Retain           Bound       default/iscsi-pvc                  <unset>                          9m46s

Test

apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: vm-storage
  namespace: default
spec:
  runStrategy: Always
  template:
    spec:
      architecture: amd64
      domain:
        devices:
          disks:
          - disk: {}
            name: containerdisk
          - name: mypvcdisk
            lun:
              bus: scsi
          interfaces:
          - masquerade: {}
            name: default
        machine:
          type: q35
        resources:
          requests:
            memory: 512Mi
      networks:
      - name: default
        pod: {}
      volumes:
      - dataVolume:
          name: fedora
        name: containerdisk
      - name: mypvcdisk
        persistentVolumeClaim:
          claimName: iscsi-pvc

After creating the virtual machine, check its disks:

[root@base-k8s-master-1 kubevirt]# virtctl console vm-storage

Successfully connected to vm-storage console. The escape sequence is ^]

[root@vm-dv ~]# lsscsi

[0:0:0:0] disk ATA QEMU HARDDISK 2.5+ /dev/sda

[6:0:0:1] disk LIO-ORG IBLOCK 4.0 /dev/sdb

Using a Ceph RBD PVC as a Lun disk

Use a Ceph RBD PVC as the Lun disk, then check the Pod logs:

[root@base-k8s-master-1 kubevirt]# kubectl logs virt-launcher-vm-storage-f94pm

...output omitted...

{"component":"virt-launcher","kind":"","level":"error","msg":"Failed to sync vmi","name":"vm-storage","namespace":"default","pos":"server.go:202","reason":"virError(Code=1, Domain=10, Message='internal error: QEMU unexpectedly closed the monitor (vm='default_vm-storage'): 2025-03-11T11:26:00.934253Z qemu-kvm: warning: Deprecated CPU topology (considered invalid): Unsupported clusters parameter mustn't be specified as 1\n2025-03-11T11:26:00.958545Z qemu-kvm: -device {\"driver\":\"scsi-block\",\"bus\":\"scsi0.0\",\"channel\":0,\"scsi-id\":0,\"lun\":1,\"drive\":\"libvirt-1-storage\",\"id\":\"ua-mypvcdisk\",\"werror\":\"stop\",\"rerror\":\"stop\"}: cannot get SG_IO version number: Inappropriate ioctl for device\nIs this a SCSI device?')","timestamp":"2025-03-11T11:26:01.162626Z","uid":"f3d2265d-cfdc-4c1d-895d-589cf8850318"}

The log reports "cannot get SG_IO version number: Inappropriate ioctl for device" followed by "Is this a SCSI device?". QEMU's scsi-block driver issues SG_IO ioctls against the backing device, and an RBD block device does not accept them.

So a Lun disk only works with a PVC backed by SCSI storage such as iSCSI or FC.
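The virt-launcher log lines are long JSON objects, and the useful part is the reason field of the error-level entries. The following is a minimal sketch for pulling that field out with grep and sed. The function name launcher_errors is hypothetical, the sample line is shortened rather than real cluster output, and the pattern assumes one JSON object per line with reason immediately followed by timestamp, as in the log above.

```shell
#!/bin/sh
# Hypothetical helper: print the "reason" field of each error-level log line
# read from stdin. Assumes "reason" is directly followed by "timestamp".
launcher_errors() {
  grep '"level":"error"' |
    sed -n 's/.*"reason":"\(.*\)","timestamp":.*/\1/p'
}

# Demo on a shortened sample line (not real cluster output).
sample='{"component":"virt-launcher","level":"error","msg":"Failed to sync vmi","reason":"cannot get SG_IO version number","timestamp":"2025-03-11T11:26:01Z"}'
printf '%s\n' "$sample" | launcher_errors
```

Against a cluster it would be fed from the Pod log, for example `kubectl logs virt-launcher-vm-storage-f94pm | launcher_errors`.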

Troubleshooting

The Pod reports an error when the virtual machine is created

[root@base-k8s-master-1 kubevirt]# kubectl describe pod virt-launcher-vm-storage-fhvqn

...output omitted...

Warning FailedMapVolume <invalid> (x3 over <invalid>) kubelet MapVolume.WaitForAttach failed for volume "iscsi-pv" : failed to get any path for iscsi disk, last err seen:

<nil>

The cause is that the PV's targetPortal must be an IP address, not a domain name.

The Lun disk needs a different bus

[root@base-k8s-master-1 kubevirt]# kubectl logs virt-launcher-vm-storage-rpnfd

...output omitted...

{"component":"virt-launcher","kind":"","level":"error","msg":"Failed to sync vmi","name":"vm-storage","namespace":"default","pos":"server.go:202","reason":"virError(Code=67, Domain=10, Message='unsupported configuration: disk device='lun' is not supported for bus='sata'')","timestamp":"2025-03-11T10:17:36.259358Z","uid":"06b478e4-fcff-4930-84dc-b7c828232c86"}

With lun: {} the disk ended up on the sata bus, which libvirt rejects for a lun device. Set the Lun bus to scsi.

- name: mypvcdisk
  lun: {}

# Change to

- name: mypvcdisk
  lun:
    bus: scsi

Disk

Create

Before adding a Disk, create a block-mode PVC:

[root@base-k8s-master-1 kubevirt]# cat disk-pvc.yml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: disk-pvc
  namespace: default
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: csi-rbd-sc
  volumeMode: Block
  resources:
    requests:
      storage: 1Gi

Add the disk:

apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: vm-storage
  namespace: default
spec:
  runStrategy: Always
  template:
    spec:
      architecture: amd64
      domain:
        devices:
          disks:
          - disk: {}
            name: containerdisk
          - name: mypvcdisk
            disk:
              bus: virtio
          interfaces:
          - masquerade: {}
            name: default
        machine:
          type: q35
        resources:
          requests:
            memory: 512Mi
      networks:
      - name: default
        pod: {}
      volumes:
      - dataVolume:
          name: fedora
        name: containerdisk
      - name: mypvcdisk
        persistentVolumeClaim:
          claimName: disk-pvc

Test

[root@base-k8s-master-1 kubevirt]# kubectl apply -f disk-pvc.yml

persistentvolumeclaim/disk-pvc created

[root@base-k8s-master-1 kubevirt]# kubectl apply -f vm-storage.yaml

virtualmachine.kubevirt.io/vm-storage created

[root@base-k8s-master-1 kubevirt]# virtctl console vm-storage

Successfully connected to vm-storage console. The escape sequence is ^]

login as 'cirros' user. default password: 'gocubsgo'. use 'sudo' for root.

cirros login: cirros

Password:

$ lsblk
NAME    MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
vda     253:0    0   44M  0 disk
|-vda1  253:1    0   35M  0 part /
`-vda15 253:15   0    8M  0 part
vdb     253:16   0    1G  0 disk

# This is a virtio disk, so lsscsi does not list it

Cdrom

Import an ISO with CDI

apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  name: "fedora-iso"
spec:
  storage:
    storageClassName: csi-rbd-sc
    resources:
      requests:
        storage: 3Gi
  source:
    http:
      url: https://download.fedoraproject.org/pub/fedora/linux/releases/41/Server/x86_64/iso/Fedora-Server-dvd-x86_64-41-1.4.iso

After the import is applied with kubectl apply -f, a PVC named fedora-iso is created:

[root@base-k8s-master-1 kubevirt]# kubectl get DataVolumes.cdi.kubevirt.io fedora-iso
NAME         PHASE       PROGRESS   RESTARTS   AGE
fedora-iso   Succeeded   100.0%                20h
[root@base-k8s-master-1 kubevirt]# kubectl get pvc fedora-iso
NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
fedora-iso   Bound    pvc-9447d289-8665-45c6-8850-4afdd2a730a9   3Gi        RWX            csi-rbd-sc     <unset>                 20h

Create a virtual machine with a Cdrom

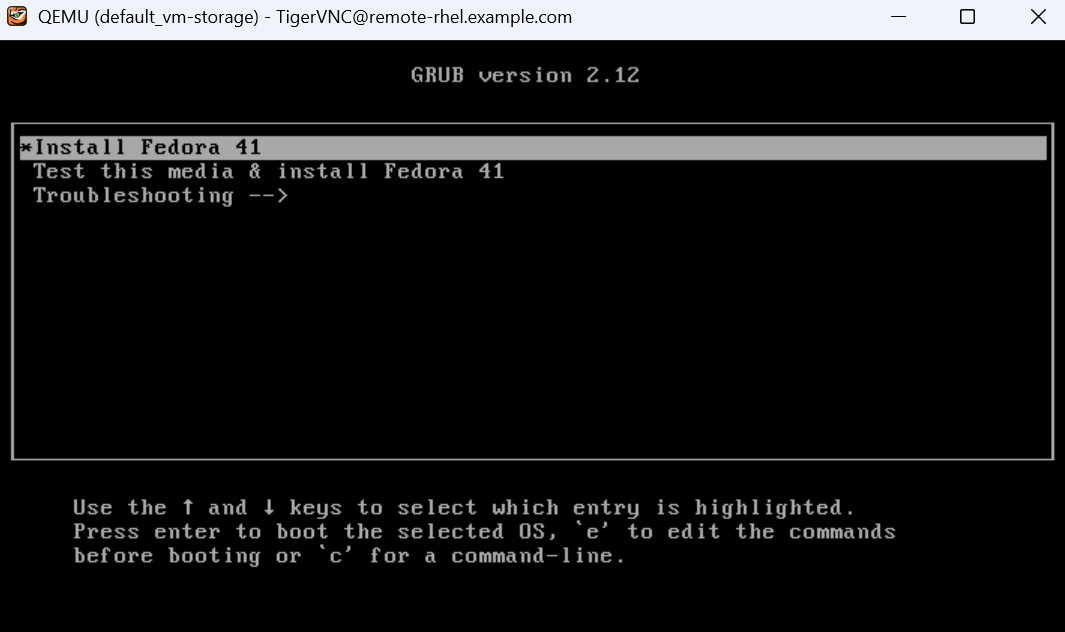

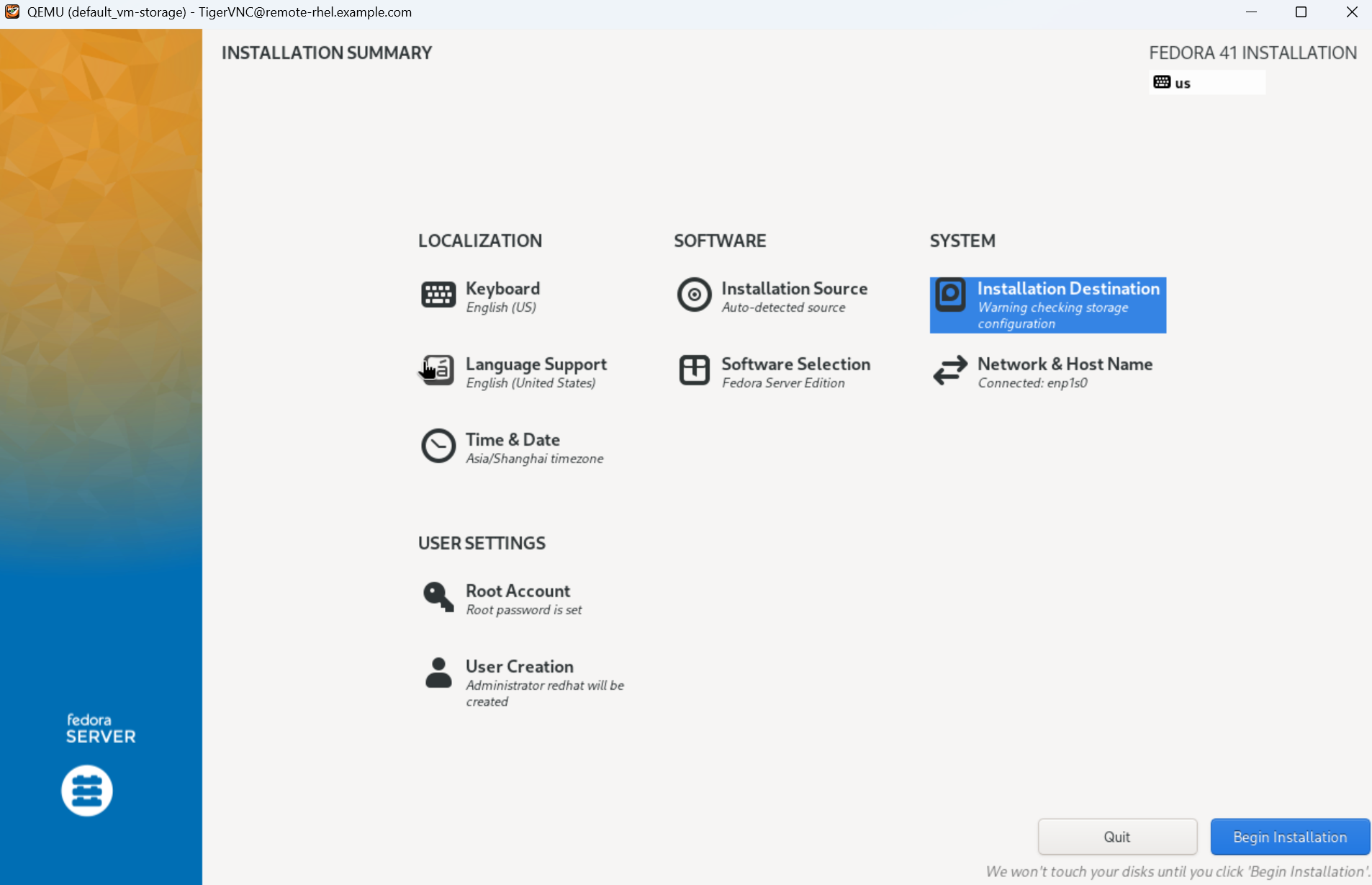

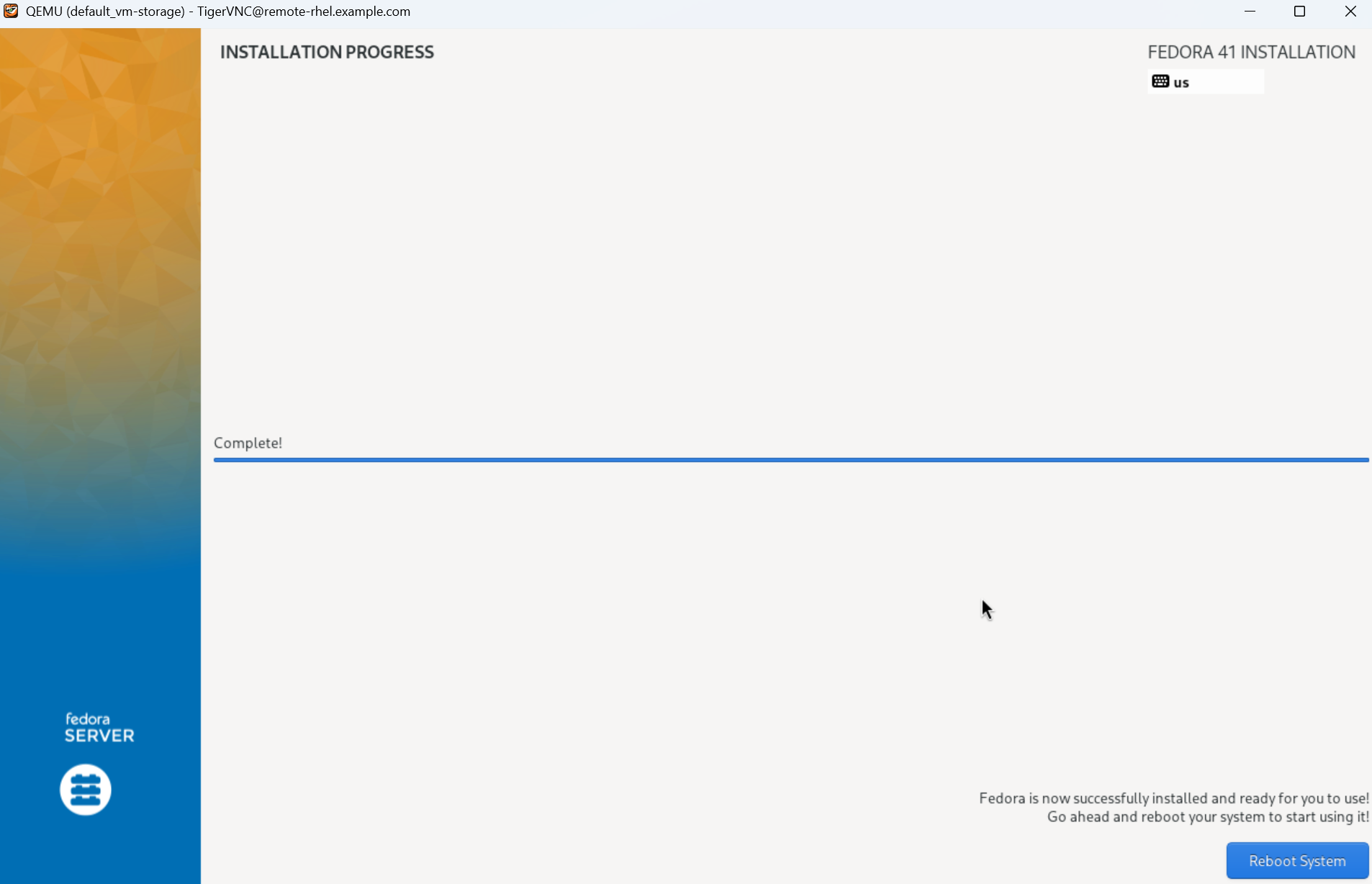

To test the Cdrom, bootOrder: 1 makes the imported ISO the boot device, which shows whether an OS installation can start from it:

apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: vm-storage
  namespace: default
spec:
  runStrategy: Always
  template:
    spec:
      architecture: amd64
      domain:
        devices:
          disks:
          - disk: {}
            name: containerdisk
          - name: mypvcdisk
            lun:
              bus: scsi
          - name: mypvcdisk-iso
            bootOrder: 1
            cdrom:
              bus: sata
              readonly: false
          interfaces:
          - masquerade: {}
            name: default
        machine:
          type: q35
        memory:
          guest: 2048Mi
        resources:
          requests:
            memory: 2048Mi
      networks:
      - name: default
        pod: {}
      volumes:
      - dataVolume:
          name: fedora
        name: containerdisk
      - name: mypvcdisk
        persistentVolumeClaim:
          claimName: iscsi-pvc
      - name: mypvcdisk-iso
        persistentVolumeClaim:
          claimName: fedora-iso

Test

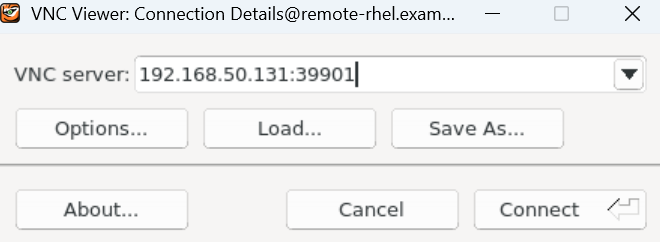

Testing an ISO installation needs a graphical console, so VNC is used here.

On a Kubernetes node, start a VNC proxy with virtctl:

[root@base-k8s-master-1 ~]# virtctl vnc --address 192.168.50.131 --proxy-only --port 39901 vm-storage

{"port":39901}

{"component":"portforward","level":"info","msg":"connection timeout: 1m0s","pos":"vnc.go:159","timestamp":"2025-03-12T12:25:16.747595Z"}

{"component":"portforward","level":"info","msg":"VNC Client connected in 1.495207817s","pos":"vnc.go:172","timestamp":"2025-03-12T12:25:18.242778Z"}

Then connect from another machine with vncviewer:

[root@remote-rhel ~]# vncviewer

TigerVNC Viewer v1.14.1

Built on: 2024-11-08 00:00

Copyright (C) 1999-2024 TigerVNC Team and many others (see README.rst)

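See https://www.tigervnc.org for information on TigerVNC.

The screenshots in this section show the installation process.

Filesystem

Create

This needs a PVC with volumeMode Filesystem. It can be created the same way as the iSCSI PV and PVC earlier; the virtual machine configuration refers to it as iscsi-filesystem-pvc.

virtiofs places a requirement on the guest kernel: 4.18 or later on the RHEL family, 5.4 or later on other Linux distributions.

Run modprobe virtiofs inside the virtual machine to check whether it is supported.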
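The kernel requirement can also be checked by comparing version numbers. The following is a minimal sketch, assuming `sort -V` (version sort, as in GNU coreutils) is available in the guest. The function names version_ge and virtiofs_kernel_ok are hypothetical, and the thresholds are the ones quoted above.

```shell
#!/bin/sh
# version_ge A B: succeed when version A >= version B.
version_ge() {
  a=${1%%-*}   # drop the release suffix, e.g. 6.11.4-301.fc41.x86_64 -> 6.11.4
  [ "$(printf '%s\n%s\n' "$2" "$a" | sort -V | head -n 1)" = "$2" ]
}

# virtiofs_kernel_ok RELEASE FAMILY: FAMILY is "rhel" or anything else.
virtiofs_kernel_ok() {
  case $2 in
    rhel) min=4.18 ;;   # RHEL family minimum quoted in the text
    *)    min=5.4 ;;    # minimum for other Linux distributions
  esac
  version_ge "$1" "$min"
}

# Check the kernel this script runs on against the non-RHEL minimum.
if virtiofs_kernel_ok "$(uname -r)" other; then
  echo "kernel $(uname -r) meets the 5.4 minimum"
else
  echo "kernel $(uname -r) is older than 5.4"
fi
```

A passing version check does not prove the module is built for that kernel, so modprobe virtiofs remains the definitive test.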

Virtual machine configuration:

apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: vm-storage
  namespace: default
spec:
  runStrategy: Always
  template:
    spec:
      architecture: amd64
      domain:
        devices:
          filesystems:
          - name: mypvcdisk-filesystem
            virtiofs: {}
          disks:
          - disk: {}
            name: containerdisk
            bootOrder: 1
          - name: mypvcdisk
            lun:
              bus: scsi
          interfaces:
          - masquerade: {}
            name: default
        machine:
          type: q35
        memory:
          guest: 2048Mi
        resources:
          requests:
            memory: 2048Mi
      networks:
      - name: default
        pod: {}
      volumes:
      - dataVolume:
          name: fedora
        name: containerdisk
      - name: mypvcdisk
        persistentVolumeClaim:
          claimName: iscsi-pvc
      - name: mypvcdisk-filesystem
        persistentVolumeClaim:
          claimName: iscsi-filesystem-pvc

Test

[root@vm-dv ~]# uname -r

6.11.4-301.fc41.x86_64

[root@vm-dv ~]# mount -t virtiofs mypvcdisk-filesystem /mnt/test/

[ 322.063824] SELinux: (dev virtiofs, type virtiofs) has no security xattr handler

[ 322.071237] SELinux: (dev virtiofs, type virtiofs) falling back to genfs

[root@vm-dv ~]# df -Th /mnt/test/
Filesystem           Type      Size  Used Avail Use% Mounted on
mypvcdisk-filesystem virtiofs  3.0G   54M  3.0G   2% /mnt/test

Inspect the virtual machine configuration

[root@base-k8s-master-1 kubevirt]# kubectl exec -it virt-launcher-vm-storage-mklnt -- /bin/bash

bash-5.1$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

...output omitted...

sdb 8:16 0 3G 0 disk /run/kubevirt-private/vmi-disks/mypvcdisk-filesystem

...output omitted...

bash-5.1$ ls -l /var/ | grep run

lrwxrwxrwx 1 root root 6 Jan 1 1970 run -> ../run

bash-5.1$ virsh list

Authorization not available. Check if polkit service is running or see debug message for more information.

Id Name State

------------------------------------

1 default_vm-storage running

bash-5.1$ virsh dumpxml default_vm-storage

...output omitted...

<filesystem type='mount'>
  <driver type='virtiofs' queue='1024'/>
  <source socket='/var/run/kubevirt/virtiofs-containers/mypvcdisk-filesystem.sock'/>
  <target dir='mypvcdisk-filesystem'/>
  <alias name='fs0'/>
  <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
</filesystem>

...output omitted...